Grokking Cartesi Public Goods: Dave

A look into a public good developed by Cartesi that solves common issues in fraud-proof protocols that validate L2 claims on a main L1 chain.

Written By EDUARDO TIÓ

Blockchain technology is on the brink of a revolution. More and more projects now understand the need for modularity and specialization. Popular layer-one chains are shifting their focus to data availability, with the goal of supporting orders of magnitude more data than previously possible.

Meanwhile, execution environments and computational layers that plan on scaling computational power through rollups (either optimistic, ZK or sovereign) have the responsibility of matching the increased data capabilities and offering an infrastructure robust enough for real applications to be developed.

The setup that can deliver the most significant gains in computational scalability is application-specific optimistic rollups with interactive dispute resolution. At the same time, the gains in computational scalability make it possible to increase programmability and improve tooling significantly.

Cartesi has chosen this very path, giving developers much cheaper computations and the possibility to build robust smart contracts using existing open-source libraries and components inside real-world operating system runtimes.

The multiple technological difficulties faced by blockchain dApps stand out when we analyze their codebase from a software engineering standpoint. Projects like Uniswap are skillfully crafted to balance several competing goals: monetary value to their users, extreme minimization of gas consumption, and security. Applications failing to fulfill these criteria compromise their adoption, put their users at risk, or lose in the fierce bidding war for block space. This scenario is inhospitable for applications and hinders innovation.

In addition, compared to traditional Web 2.0 back-end services, the experience of coding smart contracts is overwhelmingly constrained. Saying that there is a considerable distance between the capabilities of conventional web servers and blockchain smart contracts is an understatement.

Ethereum and EVM rollups are decentralized computers that force you to contend with the aspects above. They’re exceedingly slow, “peculiar” computers that require developers to code in niche programming languages.

In this weird setup, developers spend their efforts on overcoming these limitations instead of optimizing the core of their solutions. The result is often nonessential, overly complicated code wrapped around simple, limited features.

A network where everyone verifies everything is not sustainable for mass adoption. In global consensus, the increase in demand inevitably leads to cannibalistic fights among applications for blockspace. This scenario degenerates into high fees, posing an ever-growing entry barrier to projects and users alike. To address this struggle, Ethereum has pivoted, proposing a rollups-centric roadmap.

The new plan recognizes that the scalability problem comprises two major aspects: data scalability and computation scalability. The distinction between these two is often overlooked as they are currently entangled in the same notion of gas costs. It was by distinguishing them, however, that Ethereum envisioned its current roadmap.

After the Merge, and with the developments in EIP-4844 and sharding, Ethereum will reduce the cost of adding data to its blockchain by several orders of magnitude. Meanwhile, computational scaling has been delegated to rollup projects (hence the name rollup-centric).

The relationship between the Ethereum protocol and rollup solutions hides an issue that doesn’t get the attention it deserves. EVM-compatible rollups are not the best design to attain the computation scalability to match the large gains in data availability Ethereum will achieve.

EVM-based rollups can be pictured as computational shards. The design’s flaws come up as more and more applications are gradually deployed and share the same VM. The zero-sum fight for a slice of the VM’s CPU capacity leads to gentrification. Only a tiny fraction of applications are viable on each shard; the others are driven out. It is just a matter of time before these networks become congested and expensive.

Fortunately, it is possible to understand and use rollups differently. By letting go of shared VMs, we can give each application its own CPU and the exceedingly high computational performance that comes with it. Asset settlement, composability between applications, and dispute resolution can be delegated to a general-purpose base layer. This design is called application-specific rollups.

The dedicated consensus of application-specific rollups is pegged to the base layer, allowing their validator nodes (permissioned or not) to retain the settlement layer’s security guarantees. In other words, having a base layer enables a 1-of-N security model, where any single honest validator can, with the help of the base layer, enforce the correct result without the cooperation of the other validators. At the same time, application-specific consensus lets applications enjoy the full, unshared power of the hardware. This not only avoids the problem of network gentrification but also provides a significant gain in computational scalability.

The shift from a shared to an application-specific consensus doesn’t come without consequences. While this design choice implies higher friction for composability between applications, we argue that this is a less relevant concern for most of them. Having to wait for their communication to be verified, or relying on a soft finalization technique (e.g., liquidity providers), is not a big compromise in return for the massive improvements in computational power and predictability offered by app-specific chains.

In particular, choosing optimistic rollups with interactive fraud proofs gives decentralized applications computational resources comparable to mainstream computing (e.g., billions of instruction steps and large memory address spaces) without requiring special hardware to reach consensus. This is possible because interactive fraud proofs allow arbiters with limited computational resources to referee disputes between computationally unlimited provers. Here, the arbiter is a resource-limited settlement layer, and the provers are rollup validators with comparatively unlimited computational resources. To better understand how this is possible, refer to section 5.2 of Cartesi Core’s technical paper.
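Section 5.2 of the Cartesi Core paper remains the authoritative description. To build intuition for how a resource-limited arbiter can referee a dispute over a huge computation, the sketch below shows the classic bisection idea behind interactive fraud proofs: two parties that agree on the initial state but disagree on the final one narrow their disagreement down to a single instruction, and the arbiter re-executes only that one step. This is a simplified, hypothetical model, not Cartesi’s implementation or the Dave protocol: `step` is a toy stand-in for one machine instruction, states are plain integers rather than hashes of machine memory, and the parties answer queries from full in-memory traces instead of posting commitments on-chain.

```python
from typing import Callable, List, Tuple

MASK = (1 << 64) - 1


def step(state: int) -> int:
    """Hypothetical one-instruction transition of a deterministic machine.

    An affine map mod 2**64 with an odd multiplier is a bijection, so two
    traces that differ at some step keep differing at every later step.
    """
    return (state * 6364136223846793005 + 1442695040888963407) & MASK


def run(initial: int, n: int) -> List[int]:
    """Honest trace: the states after 0, 1, ..., n steps."""
    trace = [initial]
    for _ in range(n):
        trace.append(step(trace[-1]))
    return trace


def tampered_run(initial: int, n: int, k: int) -> List[int]:
    """Dishonest trace: state k (1 <= k <= n) is corrupted; later states follow from it."""
    trace = run(initial, n)
    trace[k] ^= 1
    for i in range(k + 1, n + 1):
        trace[i] = step(trace[i - 1])
    return trace


# A party answers "what is your state after i steps?"
Query = Callable[[int], int]


def bisect(query_a: Query, query_b: Query, n: int) -> Tuple[int, int]:
    """Find the last step both parties agree on; returns (index, rounds).

    Precondition: the parties agree at step 0 and disagree at step n.
    """
    lo, hi = 0, n  # invariant: agree at lo, disagree at hi
    rounds = 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        rounds += 1
        if query_a(mid) == query_b(mid):
            lo = mid
        else:
            hi = mid
    return lo, rounds


def arbitrate(query_a: Query, query_b: Query, n: int) -> Tuple[str, int, int]:
    """The resource-limited arbiter re-executes a single step to settle the dispute."""
    lo, rounds = bisect(query_a, query_b, n)
    expected = step(query_a(lo))  # the only instruction the arbiter executes
    if query_a(lo + 1) == expected:
        winner = "A"
    elif query_b(lo + 1) == expected:
        winner = "B"
    else:
        winner = "neither"
    return winner, lo, rounds


if __name__ == "__main__":
    n = 1 << 16
    honest = run(42, n)
    cheat = tampered_run(42, n, 12345)
    print(arbitrate(honest.__getitem__, cheat.__getitem__, n))  # prints ('A', 12344, 16)
```

For a trace of 2^16 steps, the arbiter compares only 16 pairs of claimed states and executes a single step. The number of rounds grows logarithmically with the length of the computation, which is what allows a slow base layer to referee very large off-chain computations.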

In their search for maximum scalability and customizability, application and protocol developers are turning their attention to different forms of application-specific chains. A few examples are Axie Infinity’s Ronin sidechain, dYdX’s sovereign chain, StarkWare’s fractal scaling design, and Celestia’s modular execution layers.

Application-specific rollup chains can fill this demand without the dangerous fragmentation of validation that sovereign (layer-one) application-specific chains incur. Instead, application-specific rollup chains inherit the robust security guarantees of the underlying base layer without depending on cross-chain bridges, which have proven to be dangerous.

The technological advantage of rollup app-chains stems from the fact that they are safe with 1-of-N honest parties rather than requiring an honest majority. In sum, application-specific rollups are just as good as application-specific sidechains, without the heavy compromises in security.
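The gap between the two security models can be made concrete with a deliberately simplified model. Assume, hypothetically, N validators that are each honest independently with probability p. Real validators are neither independent nor equally trustworthy, so treat the numbers as intuition only. A 1-of-N system stays safe if at least one validator is honest (and, as noted above, can reach the base layer), while an honest-majority system needs strictly more than N/2 honest validators.

```python
from math import comb


def p_safe_one_of_n(n: int, p: float) -> float:
    """Probability that at least one of n independent validators is honest."""
    return 1 - (1 - p) ** n


def p_safe_honest_majority(n: int, p: float) -> float:
    """Probability that strictly more than n/2 of n validators are honest (binomial tail)."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))


if __name__ == "__main__":
    # Hypothetical scenario: 10 validators, each honest with probability 0.3.
    print(f"1-of-N:          {p_safe_one_of_n(10, 0.3):.4f}")
    print(f"honest majority: {p_safe_honest_majority(10, 0.3):.4f}")
```

Under these assumptions, ten validators that are each honest only 30% of the time keep a 1-of-N system safe with probability of roughly 0.97, versus roughly 0.05 for an honest-majority system.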

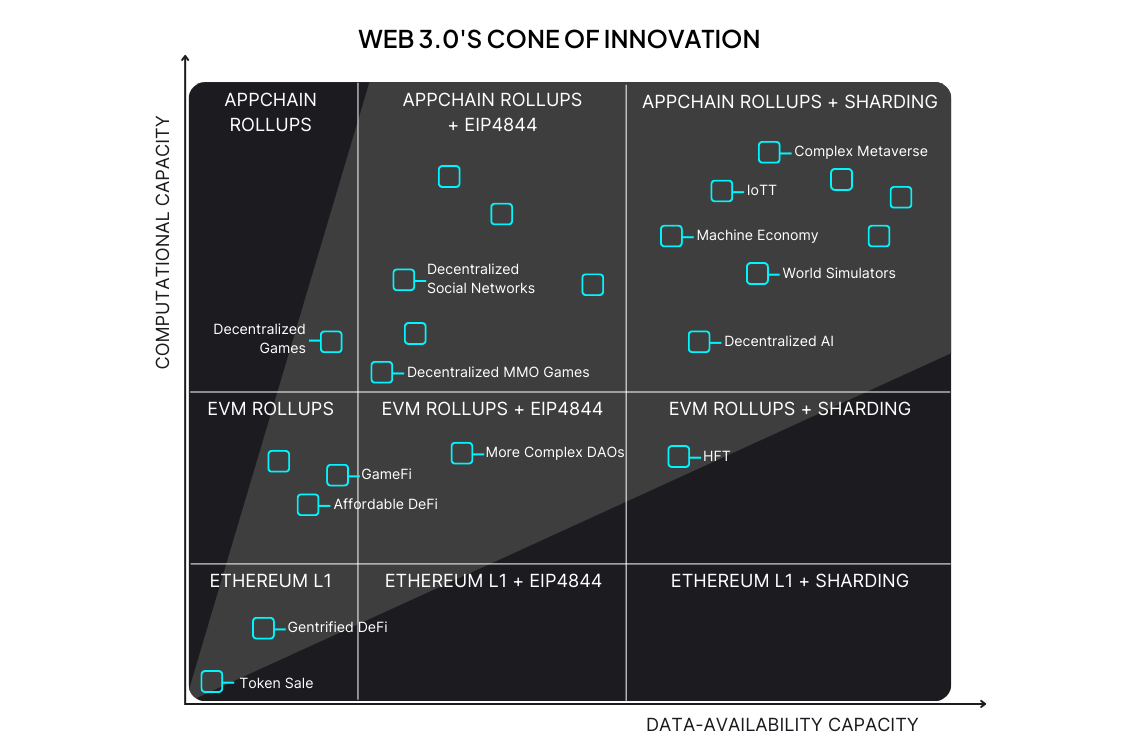

The effects of concomitantly scaling computational and data-availability capacity can be better visualized with the help of the figure above.

The figure is divided into major areas representing the scaling solutions being combined and how they perform in terms of computational and data capacity. Computational capacity improves as we move from Ethereum layer one to EVM rollups and finally to dedicated app-chains, while data capacity improves with the addition of EIP-4844 and sharding. The blue cone shows which applications become progressively possible with scaling in both dimensions. We call the blue area web3’s cone of innovation.

The gray areas outside the cone are where the gains in data availability can’t be fully enjoyed because the solutions lack computational capacity, and vice versa. The small white squares are examples of applications that start to become possible when we reach those milestones; the unlabeled ones remind us that we have no idea what cool new applications will emerge once the environment is more robust.

The innovation cone is not meant to be precise. Its direction and opening angle are not to be taken literally. Moreover, the applications that become possible in each region are prone to fall in different areas. The figure intends merely to provide an intuitive outlook on the growing horizon of innovation for decentralized applications.

Besides the computational constraints described above, developers of dApps face another enormous burden: the lack of a mature environment, in the form of insufficient software tooling and libraries.

To better illustrate this problem, consider one of the most impressive decentralized games we have come across recently, Topology. This ambitious project mixes strategic infrastructure building with planetary dynamics! Crazy stuff. However, just by looking at its source code, we can see the beasts the team had to slay. To give a single example, they had to implement a classical algorithm for simulating planetary dynamics from scratch. Behind the impressive talent the Topology team has displayed lies a troubling concern: only exceptional developers can bring their ideas to reality in such an immature environment.

The above example is far from unique. Many libraries (e.g., 1, 2, 3, 4, 5, 6) are being written in Solidity to aid the development of smart contracts and dApps. Still, the language and its ecosystem remain very immature, and some basic tasks still force developers to search forums for help.

This is not the reality in the traditional software industry. The game Angry Birds, for example, needed the same libraries as Topology (after all, planets and flying birds follow the same laws of physics). However, the developers behind Angry Birds were not forced to write every line of code they needed from scratch. There are existing libraries for this in basically every language imaginable!

The one thing that makes it possible for traditional developers to access all of these libraries is the gold standard for solving the programmability problem: a full-fledged operating system. Developers working in all fields, from Web2 to traditional games all the way to satellite launches, count on an operating system to give them the support they need. The languages and libraries they need to materialize their ideas are what allow them to focus their efforts on what they really want to build, rather than on the underlying infrastructure that makes it possible.

This is why we have chosen the RISC-V architecture to build our rollups solution. It makes it possible to port Linux or other operating systems into rollups. This way, developers can bring their ideas to life in their favorite languages and libraries, without giving up on the solid security guarantees of the blockchain, as detailed in previous articles (1, 2, 3).

Up to this point, Linux has been the focus, but it is possible to run any operating system that can be compiled to RISC-V, such as some very secure microkernels.

We first discussed the importance of a modular rollup execution layer that truly scales computation and prevents dApps from engaging with each other in a zero-sum game for computational resources. Then we elaborated on how important it is for developers to count on the abstraction power of an OS like mainstream developers do.

It was with these two needs in mind that we designed and built Cartesi Rollups as a modular execution layer that gives the following scaling benefits for dApps:

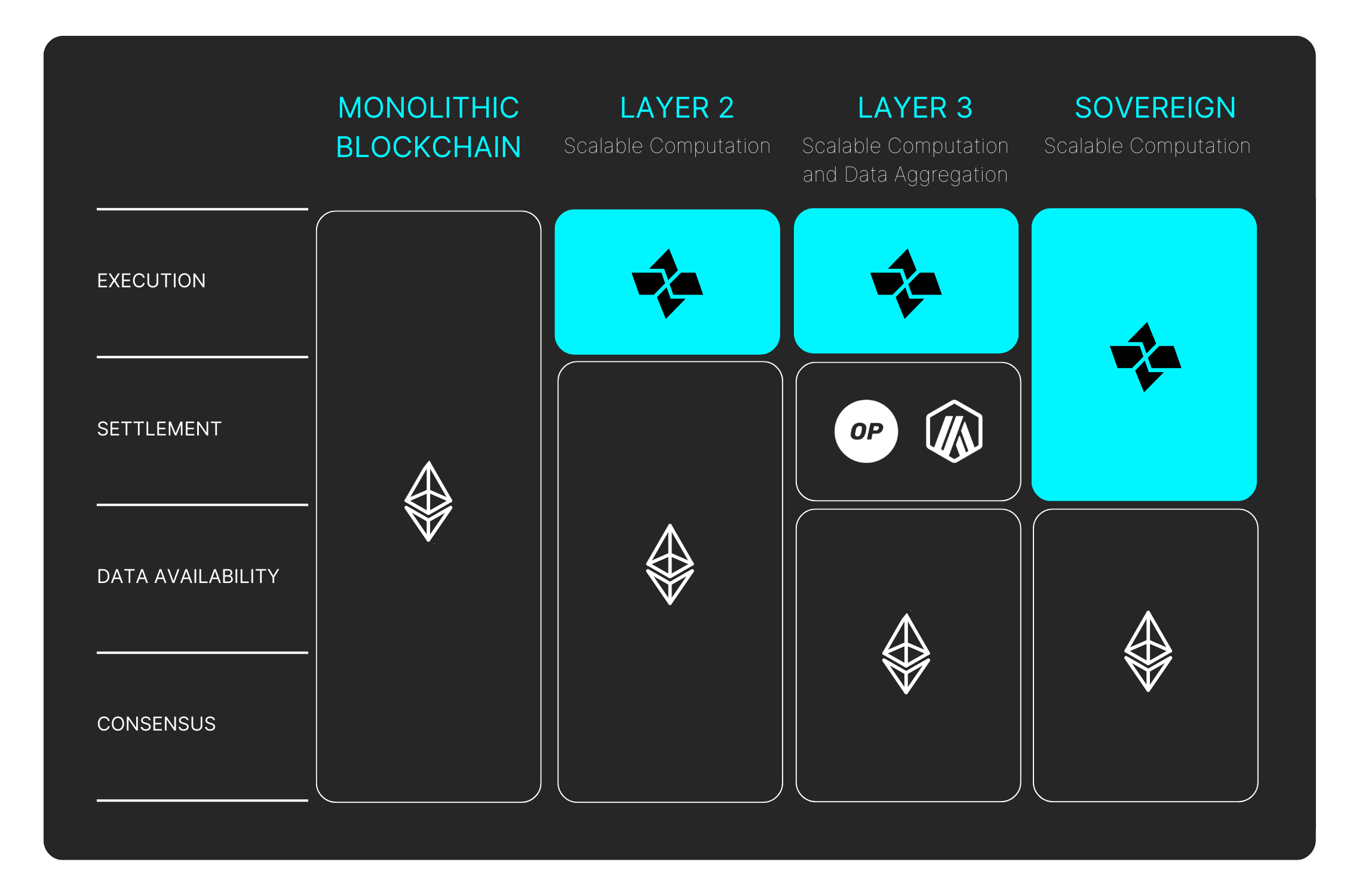

Cartesi Rollups applications can be used as a layer two (e.g., on top of Ethereum), as a layer three (e.g., on top of Arbitrum or ZK-EVM chains), or as sovereign rollups (e.g., on top of Celestia). Developers can port their applications from one platform to another with minimal code changes.

Cartesi allows developers to focus on what they’re building instead of where they’re building or what inconvenient limitations they will need to contend with.

Innovation can then flourish without popular applications cannibalizing less established ones. Decentralized applications can have all the computing power they need while maintaining cost predictability. Developers can leverage battle-tested programming libraries and create decentralized MMORPGs that are actually fun and where killing a goblin doesn’t cost players 3 dollars!

From a customizability perspective, Cartesi Rollups app-chains give dApps the possibility of charging different prices for different actions. They could, for instance, waive the gas fees for market makers on their decentralized exchanges or increase the cost of predatory fishing on their ocean simulator dApp.
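Because an app-chain does not compete for a shared VM, a fee policy like this can be ordinary application logic. The sketch below is purely hypothetical: the action names, fee amounts, quota, and the `fee_for` function are invented for illustration and are not part of any Cartesi Rollups API. It waives fees for liquidity provision on an exchange and adds a surcharge when a catch in an ocean simulator exceeds a quota.

```python
from decimal import Decimal

# Hypothetical per-action base fees, denominated in an app-defined fee token.
BASE_FEES = {
    "swap": Decimal("0.10"),
    "provide_liquidity": Decimal("0"),  # waived for market makers
    "fish": Decimal("0.05"),  # charged per fish caught
}

FISH_QUOTA = 10  # hypothetical catch size above which fishing counts as predatory
OVERFISHING_SURCHARGE = Decimal("0.50")  # extra fee per fish above the quota


def fee_for(action: str, quantity: int = 1) -> Decimal:
    """Return the fee for performing `action` `quantity` times."""
    if action not in BASE_FEES:
        raise ValueError(f"unknown action: {action}")
    if quantity < 1:
        raise ValueError("quantity must be positive")
    fee = BASE_FEES[action] * quantity
    if action == "fish" and quantity > FISH_QUOTA:
        fee += OVERFISHING_SURCHARGE * (quantity - FISH_QUOTA)
    return fee


if __name__ == "__main__":
    print(fee_for("provide_liquidity", 100))
    print(fee_for("fish", 5))
    print(fee_for("fish", 12))
```

Using `Decimal` rather than floats keeps fee arithmetic exact, which matters once fees are settled as token amounts.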

Cartesi has a very clear vision of the revolution that is about to take place in decentralized technologies. Cartesi Rollups is being developed as a laser-focused answer to the needs of this new environment.


© 2024 The Cartesi Foundation. All rights reserved.